Container Network Interface (CNI)

This page shows you how to install the CNI plugins on your Linux distribution and configure your Nomad clients to use them.

Introduction

Nomad implements custom networking through a combination of CNI plugin binaries and CNI configuration files. You should install the CNI reference plugins because certain Nomad networking features, like bridge network mode and Consul service mesh, use the plugins to provide an operating-system-agnostic interface for configuring workload networking.

You need to install CNI network plugins on each client.

Requirements

- You are familiar with CNI plugins.

- You are familiar with how Nomad supports Container Network Interface (CNI) plugins.

- You are running Nomad on Linux.

CNI plugins and bridge networking workflow

Perform the following on each Nomad client:

- Install CNI reference plugins.

- Configure bridge module to route traffic through iptables.

- Verify cgroup controllers.

- Create a bridge mode configuration.

- Configure Nomad clients.

Install CNI reference plugins

Nomad uses CNI plugins to configure network namespaces when using the bridge network mode. You must install the CNI plugins on all Linux Nomad client nodes that use network namespaces. Refer to the CNI Plugins Overview guide for details on individual plugins.

The following commands determine your operating system architecture, download the v1.5.1 release, and extract the CNI plugin binaries into the /opt/cni/bin directory. Update the CNI_PLUGIN_VERSION value if you want to use a different release version.

$ export ARCH_CNI=$( [ "$(uname -m)" = aarch64 ] && echo arm64 || echo amd64)
$ export CNI_PLUGIN_VERSION=v1.5.1
$ curl -L -o cni-plugins.tgz "https://github.com/containernetworking/plugins/releases/download/${CNI_PLUGIN_VERSION}/cni-plugins-linux-${ARCH_CNI}-${CNI_PLUGIN_VERSION}.tgz" && \
  sudo mkdir -p /opt/cni/bin && \
  sudo tar -C /opt/cni/bin -xzf cni-plugins.tgz
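To confirm that the extraction worked, you can check that the plugins referenced by Nomad's bridge configuration (loopback, bridge, firewall, portmap, and the host-local IPAM plugin) are present and executable. The following is a minimal sketch. The function name check_cni_plugins is hypothetical, and the default directory assumes the /opt/cni/bin path used above.

```shell
# check_cni_plugins [DIR]
# Hypothetical helper: prints each required plugin that is missing or not
# executable in DIR, and returns non-zero if any are missing.
check_cni_plugins() {
  local dir="${1:-/opt/cni/bin}"
  # Plugins used by the bridge configuration on this page.
  local plugins="loopback bridge firewall portmap host-local"
  local missing=0 plugin
  for plugin in $plugins; do
    if [ ! -x "$dir/$plugin" ]; then
      echo "missing: $plugin"
      missing=1
    fi
  done
  return "$missing"
}
```

For example, running check_cni_plugins /opt/cni/bin prints nothing and returns success when all five plugins are in place.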

Configure bridge module to route traffic through iptables

Nomad's task group networks and Consul Connect integration use bridge networking and iptables to send traffic between containers. The Linux kernel bridge module has three "tunables" that control whether iptables processes traffic crossing the bridge. Some operating systems (Red Hat, CentOS, and Fedora in particular) configure these tunables to optimize for VM workloads, where iptables rules might not be correctly configured for guest traffic.

Ensure that your Linux distribution is configured to allow iptables to route container traffic through the bridge network. Set these tunables to 1 to allow iptables processing for the bridge network. Writing to these files requires root privileges.

$ echo 1 | sudo tee /proc/sys/net/bridge/bridge-nf-call-arptables
$ echo 1 | sudo tee /proc/sys/net/bridge/bridge-nf-call-ip6tables
$ echo 1 | sudo tee /proc/sys/net/bridge/bridge-nf-call-iptables
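Reading the current values back does not require root, so you can verify the result as any user. The following sketch uses a hypothetical check_bridge_tunables function to report any tunable that is missing or not set to 1. It assumes the tunables live under /proc/sys/net/bridge, which is typically only present once the kernel's br_netfilter module is loaded.

```shell
# check_bridge_tunables [DIR]
# Hypothetical helper: reports each bridge tunable in DIR that is missing
# or not set to 1, and returns non-zero if any check fails.
check_bridge_tunables() {
  local dir="${1:-/proc/sys/net/bridge}"
  local status=0 name value
  for name in bridge-nf-call-arptables bridge-nf-call-ip6tables bridge-nf-call-iptables; do
    if [ ! -r "$dir/$name" ]; then
      echo "$name: not found"
      status=1
      continue
    fi
    value=$(cat "$dir/$name")
    if [ "$value" != "1" ]; then
      echo "$name: $value (expected 1)"
      status=1
    fi
  done
  return "$status"
}
```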

To preserve these settings when a client node restarts, add a file containing the following lines to /etc/sysctl.d/, or remove the file that your Linux distribution places in that directory to disable them.

/etc/sysctl.d/bridge.conf

net.bridge.bridge-nf-call-arptables = 1
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
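A minimal sketch for writing that file follows. It assumes the hypothetical helper name write_bridge_sysctl_conf and defaults to the path shown above. Files in /etc/sysctl.d are typically applied in lexicographic order by filename, and a later file overrides an earlier one that sets the same key. This is why a distribution-provided file can undo these values.

```shell
# write_bridge_sysctl_conf [PATH]
# Hypothetical helper: writes the three bridge tunables to a sysctl.d file.
# The default path is under /etc, so writing there requires root.
write_bridge_sysctl_conf() {
  local path="${1:-/etc/sysctl.d/bridge.conf}"
  # Quoted heredoc delimiter: the lines are written literally.
  cat > "$path" <<'EOF'
net.bridge.bridge-nf-call-arptables = 1
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF
}
```

Run it as root. Then run sudo sysctl --system to apply every sysctl.d file without rebooting.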

Verify cgroup controllers

On Linux, Nomad uses cgroups to control access to resources like CPU and memory. Nomad supports both cgroups v2 and the legacy cgroups v1. When Nomad clients start, they determine the available cgroup controllers and include the attribute os.cgroups.version in their fingerprint.

Nomad can only use cgroups to control resources if all the required controllers are available. If one or more required controllers are not available, Nomad entirely disables the resource controls that require cgroups. Missing controllers are most common on platforms used outside of datacenters, such as the Raspberry Pi or similar hobbyist computers.

On cgroups v2, you can verify that you have all required controllers.

$ cat /sys/fs/cgroup/cgroup.controllers
cpuset cpu io memory pids
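On a cgroups v2 host, stat -fc %T /sys/fs/cgroup typically prints cgroup2fs. On such a host, a short script can compare cgroup.controllers against the required list shown above. This sketch uses a hypothetical missing_cgroup_controllers function and covers cgroups v2 only.

```shell
# missing_cgroup_controllers [FILE]
# Hypothetical helper: prints the required controllers that do not appear
# in a cgroups v2 cgroup.controllers file. Prints nothing if all are present.
missing_cgroup_controllers() {
  local file="${1:-/sys/fs/cgroup/cgroup.controllers}"
  local available required missing=""
  # Pad with spaces so "cpu" does not match inside "cpuset".
  available=" $(cat "$file") "
  for required in cpuset cpu io memory pids; do
    case "$available" in
      *" $required "*) ;;
      *) missing="$missing $required" ;;
    esac
  done
  echo "${missing# }"
}
```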

On legacy cgroups v1, look for this same list of required controllers as directories under the directory /sys/fs/cgroup.

To enable missing cgroup controllers, add the appropriate kernel boot command-line arguments. For example, to enable the cpuset controller, add cgroup_cpuset=1 cgroup_enable=cpuset. Add these arguments wherever your bootloader configuration specifies the kernel command line.
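After rebooting, you can confirm that the kernel received the arguments by reading /proc/cmdline. The has_kernel_arg helper below is a hypothetical sketch. It matches whole space-separated words only, so an argument does not match a longer argument that merely contains it.

```shell
# has_kernel_arg ARG [CMDLINE_FILE]
# Hypothetical helper: returns 0 if ARG appears as a whole word on the
# kernel command line (read from /proc/cmdline by default).
has_kernel_arg() {
  local arg="$1" file="${2:-/proc/cmdline}"
  case " $(cat "$file") " in
    *" $arg "*) return 0 ;;
    *) return 1 ;;
  esac
}
```

For example, has_kernel_arg cgroup_enable=cpuset || echo "cgroup_enable=cpuset is not set" reports when the bootloader change has not taken effect.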

Refer to the cgroup controller requirements for more details.

Create bridge mode configuration

Nomad's own bridge network mode is implemented with CNI plugins and configuration: Nomad configures the loopback, bridge, firewall, and portmap CNI plugins together to create its bridge network.

Nomad uses the following configuration template when setting up a bridge network. The template placeholders have been replaced with the default configuration values for bridge_network_name, bridge_network_subnet, and an internal constant that provides the value for iptablesAdminChainName. You can use this template as a basis for your own CNI-based bridge network configuration in cases where you need access to an unsupported option in the default configuration, like hairpin mode. When making your own bridge network based on this template, ensure that you change the iptablesAdminChainName to a unique value for your configuration.

Refer to the CNI Specification for more information on this configuration format.

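Create your configuration in the /opt/cni/config directory. Because this configuration contains a list of plugin configurations, save it with the .conflist extension.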

{
  "cniVersion": "1.1.0",
  "name": "nomad",
  "plugins": [
    {
      "type": "loopback"
    },
    {
      "type": "bridge",
      "bridge": "nomad",
      "ipMasq": true,
      "isGateway": true,
      "forceAddress": true,
      "hairpinMode": false,
      "ipam": {
        "type": "host-local",
        "ranges": [
          [
            {
              "subnet": "172.26.64.0/20"
            }
          ]
        ],
        "routes": [
          { "dst": "0.0.0.0/0" }
        ]
      }
    },
    {
      "type": "firewall",
      "backend": "iptables",
      "iptablesAdminChainName": "NOMAD-ADMIN"
    },
    {
      "type": "portmap",
      "capabilities": {"portMappings": true},
      "snat": true
    }
  ]
}
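When you base your own network on this template, a small generator makes it harder to forget to choose a unique admin chain name. The following sketch prints a configuration list with the same plugins and options as the template. It substitutes the network name, bridge name, subnet, admin chain, and hairpin setting. The function name render_bridge_conflist and the example values in the usage note are hypothetical.

```shell
# render_bridge_conflist NAME BRIDGE SUBNET ADMIN_CHAIN [HAIRPIN]
# Hypothetical helper: prints a CNI configuration list modeled on Nomad's
# default bridge configuration. HAIRPIN defaults to false.
render_bridge_conflist() {
  local name="$1" bridge="$2" subnet="$3" admin_chain="$4" hairpin="${5:-false}"
  cat <<EOF
{
  "cniVersion": "1.1.0",
  "name": "$name",
  "plugins": [
    { "type": "loopback" },
    {
      "type": "bridge",
      "bridge": "$bridge",
      "ipMasq": true,
      "isGateway": true,
      "forceAddress": true,
      "hairpinMode": $hairpin,
      "ipam": {
        "type": "host-local",
        "ranges": [[{ "subnet": "$subnet" }]],
        "routes": [{ "dst": "0.0.0.0/0" }]
      }
    },
    {
      "type": "firewall",
      "backend": "iptables",
      "iptablesAdminChainName": "$admin_chain"
    },
    {
      "type": "portmap",
      "capabilities": { "portMappings": true },
      "snat": true
    }
  ]
}
EOF
}
```

The following command writes a hairpin-enabled network named mynet:

render_bridge_conflist mynet mynet0 172.26.80.0/20 MYNET-ADMIN true | sudo tee /opt/cni/config/mynet.conflist

Its subnet does not overlap Nomad's default 172.26.64.0/20, and jobs can select it with the network mode cni/mynet. Piping the output through python3 -m json.tool first is a quick way to confirm that it parses.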

For a more thorough understanding of this configuration, consider each CNI plugin's configuration in turn.

loopback

The loopback plugin sets the default local interface, lo, created inside the bridge network's network namespace to UP. This allows workloads running inside the namespace to bind to a namespace-specific loopback interface.

bridge

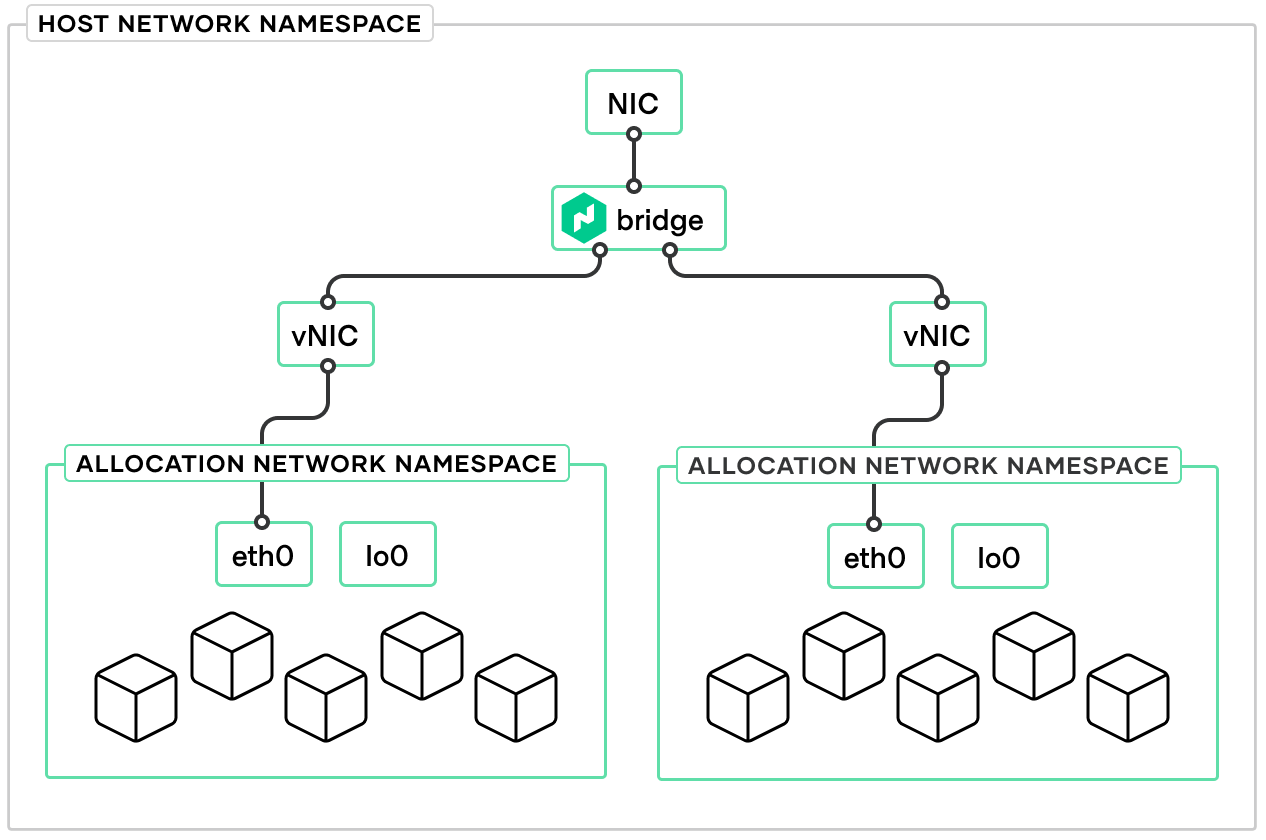

The bridge plugin creates a bridge (virtual switch) named nomad that resides in the host network namespace, and it connects the allocations on that host to it. By default, Nomad creates a single bridge for each client. Because this bridge is intended to provide network connectivity to allocations, it is configured as a gateway for outgoing traffic by setting isGateway to true. This setting tells the plugin to assign an IP address to the bridge interface. That address comes from the ipam configuration: the default configuration uses the host-local plugin to give the host side of the bridge the address 172.26.64.1 in the 172.26.64.0/20 subnet. When attaching allocations to the bridge, the plugin assigns them addresses from the same subnet using the host-local plugin. The configuration also specifies a default route for the allocations through the host-side bridge address.
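The arithmetic behind those defaults can be checked with a small sketch. A /20 prefix leaves 12 host bits, so the subnet holds 2^12 = 4096 addresses. Excluding the network and broadcast addresses leaves 4094 host addresses. The host-local plugin's default gateway is the first of these, 172.26.64.1. The subnet_info function below is hypothetical and handles IPv4 only.

```shell
# subnet_info CIDR
# Hypothetical helper: prints the first host address of an IPv4 CIDR block
# (the host-local plugin's default gateway) and the number of host addresses
# once the network and broadcast addresses are excluded.
subnet_info() {
  local ip="${1%/*}" prefix="${1#*/}"
  local a b c d addr mask network first hosts
  # Split the dotted-quad address into its four octets.
  IFS=. read -r a b c d <<EOF
$ip
EOF
  addr=$(( (a << 24) | (b << 16) | (c << 8) | d ))
  mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  network=$(( addr & mask ))
  first=$(( network + 1 ))
  hosts=$(( (1 << (32 - prefix)) - 2 ))
  printf 'gateway=%d.%d.%d.%d hosts=%d\n' \
    $(( (first >> 24) & 255 )) $(( (first >> 16) & 255 )) \
    $(( (first >> 8) & 255 )) $(( first & 255 )) "$hosts"
}
```

For example, subnet_info 172.26.64.0/20 prints gateway=172.26.64.1 hosts=4094. Once an allocation that uses bridge networking is running on a client, ip addr show dev nomad should list the bridge with that gateway address.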

firewall

The firewall plugin creates firewall rules that allow traffic to and from the allocation's IP address through the host network. Nomad uses the iptables backend for the firewall plugin. This configuration creates two new iptables chains, CNI-FORWARD and NOMAD-ADMIN, in the filter table and adds rules that allow the given interface to send and receive traffic.

The firewall plugin creates an admin chain using the name provided in the iptablesAdminChainName attribute. In this case, it's called NOMAD-ADMIN. The admin chain is a user-controlled chain for custom rules that run before the rules managed by the firewall plugin. The firewall plugin does not add, delete, or modify rules in the admin chain.

A new chain, CNI-FORWARD, is added to the FORWARD chain. CNI-FORWARD is the chain where rules are added when allocations are created and from which rules are removed when those allocations stop. The CNI-FORWARD chain first sends all traffic to the NOMAD-ADMIN chain.

Use the following command to list the iptables rules present in each chain.

$ sudo iptables -L
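iptables -L prints every chain in the filter table. To narrow the output to the two chains described above, you can print only those chains in rule-specification form with iptables -S. This sketch reads an IPTABLES variable, which defaults to sudo iptables, so you can substitute a different command. Both that variable and the show_cni_filter_chains name are hypothetical.

```shell
# Hypothetical override hook: set IPTABLES=iptables if you are already root.
: "${IPTABLES:=sudo iptables}"

# show_cni_filter_chains [ADMIN_CHAIN]
# Prints the rules of the CNI-FORWARD chain and of the admin chain
# (NOMAD-ADMIN by default) from the filter table as rule specifications.
show_cni_filter_chains() {
  local admin_chain="${1:-NOMAD-ADMIN}"
  $IPTABLES -t filter -S CNI-FORWARD
  $IPTABLES -t filter -S "$admin_chain"
}
```

If you created your own network with a different iptablesAdminChainName, pass that chain name as the argument.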

portmap

Nomad needs to be able to map specific ports from the host to tasks running in the allocation namespace. The portmap plugin forwards traffic from one or more ports on the host to the allocation using network address translation (NAT) rules.

The plugin sets up two sequences of chains and rules:

- One “primary” DNAT (destination NAT) sequence to rewrite the destination.

- One SNAT (source NAT) sequence that will masquerade traffic as needed.

You can use the following command to list the iptables rules in the NAT table.

$ sudo iptables -t nat -L
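The NAT table can also contain many chains unrelated to Nomad. The following sketch lists only the user-defined chains whose names start with CNI-. It assumes, as is typical, that the chains created by the CNI plugins carry that prefix. As in the filter-table sketch, the IPTABLES variable and the list_cni_nat_chains name are hypothetical.

```shell
: "${IPTABLES:=sudo iptables}"

# list_cni_nat_chains
# Hypothetical helper: prints the names of user-defined chains in the nat
# table whose names start with "CNI-".
list_cni_nat_chains() {
  # In -S output, each user-defined chain is declared by a line "-N NAME".
  $IPTABLES -t nat -S | awk '$1 == "-N" && $2 ~ /^CNI-/ { print $2 }'
}
```

To see the DNAT or SNAT rules in one of the listed chains, pass its name to iptables, for example sudo iptables -t nat -S followed by the chain name.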

Configure Nomad clients

The CNI specification defines a network configuration format for administrators. It contains directives for both the orchestrator and the plugins to consume. At plugin execution time, this configuration format is interpreted by Nomad and transformed into arguments for the plugins.

Nomad reads the following files from the directory set by the cni_config_dir parameter (/opt/cni/config by default):

- .conflist: Nomad loads these files as network configurations that contain a list of plugin configurations.

- .conf and .json: Nomad loads these files as individual plugin configurations for a specific network.
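To check how the files in your configuration directory map to these two kinds, you can classify them by extension. This is a sketch with a hypothetical list_cni_configs function. It labels any other file as ignored, which is an assumption based on the extensions listed above rather than documented Nomad behavior.

```shell
# list_cni_configs [DIR]
# Hypothetical helper: classifies the files in a CNI configuration
# directory by extension. DIR defaults to /opt/cni/config.
list_cni_configs() {
  local dir="${1:-/opt/cni/config}" f
  for f in "$dir"/*; do
    # Skip subdirectories and the unexpanded glob of an empty directory.
    [ -f "$f" ] || continue
    case "$f" in
      *.conflist) echo "network list: ${f##*/}" ;;
      *.conf|*.json) echo "single plugin: ${f##*/}" ;;
      *) echo "ignored: ${f##*/}" ;;
    esac
  done
}
```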

Add the cni_path and cni_config_dir parameters to each client's client.hcl file.

/etc/nomad.d/client.hcl

client {
  enabled        = true
  cni_path       = "/opt/cni/bin"
  cni_config_dir = "/opt/cni/config"
}
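After editing client.hcl, restart the Nomad agent so it picks up the new paths. For example, run sudo systemctl restart nomad, assuming a systemd unit named nomad. The sketch below then filters the local node's verbose status for lines that mention CNI. Which CNI-related attributes appear depends on your Nomad version, so treat the output as a quick sanity check. The NOMAD variable and the show_cni_fingerprint name are hypothetical.

```shell
# Hypothetical override hook for the Nomad CLI command.
: "${NOMAD:=nomad}"

# show_cni_fingerprint
# Hypothetical helper: prints the lines of the local node's verbose status
# that mention CNI (case-insensitive).
show_cni_fingerprint() {
  $NOMAD node status -self -verbose | grep -i 'cni'
}
```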

Use CNI networks with Nomad jobs

To specify that a job should use a CNI network, set the task group's network mode attribute to the value cni/<your_cni_config_name>. Nomad then schedules the workload on client nodes that have fingerprinted a CNI configuration with the given name. For example, to use the configuration named mynet, you should set the task group's network mode to cni/mynet. Nodes that have a network configuration defining a network named mynet in their cni_config_dir are eligible to run the workload.
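For example, the following sketch prints a minimal job specification whose task group uses a CNI network by name. The job, group, and task names, the dc1 datacenter, the docker driver, the nginx:alpine image, and the render_cni_job helper itself are all hypothetical placeholders.

```shell
# render_cni_job JOB NETWORK
# Hypothetical helper: prints a minimal job specification whose task group
# uses the CNI network named NETWORK through the network mode cni/NETWORK.
render_cni_job() {
  local job="$1" network="$2"
  cat <<EOF
job "$job" {
  datacenters = ["dc1"]

  group "app" {
    network {
      mode = "cni/$network"
    }

    task "web" {
      driver = "docker"

      config {
        image = "nginx:alpine"
      }
    }
  }
}
EOF
}
```

The following command submits the job:

render_cni_job example mynet > example.nomad.hcl && nomad job run example.nomad.hcl

Nomad places the job only on clients that have fingerprinted a CNI network named mynet.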